A Byte Of Your Intelligence: Are We Smarter, Dumber, Or Just Differently Wired?

Parsing the Impact of Technology and AI on Human Intelligence.

Christian Lous Lange, the eminent Norwegian historian, once pithily observed that “Technology is a useful servant but a dangerous master.” As we spin our digital webs ever wider, I can’t help but revisit this sentiment. His words hold even greater relevance today, as we venture deeper into the labyrinth of digital advances that have transformed our lives.

It’s high time we critically examined the elephant in the room: Are our leaps in technology and artificial intelligence (AI) making us smarter, making us less intelligent, or undermining our skills in critical thinking and problem-solving?

Firstly, the ‘smarter’ argument. Technology has democratised information like never before. The Internet, often hailed as the world’s largest open university, offers an extensive and diverse repository of knowledge, accessible at a moment’s notice. Whether you want to explore the perplexing domains of quantum physics, understand the geopolitics of the Middle East, or simply perfect your sourdough recipe, the Internet is ready with answers. Technology’s role in learning has extended to the development of AI tools, which are progressively personalising and enhancing the educational experience, moulding pedagogy to fit the needs of individual learners [1].

This explosion of accessible knowledge is a compelling endorsement for the ‘smarter’ hypothesis, but it’s not the only one. Technological advances and AI have gifted us with abilities that border on the superhuman. Take DeepMind’s AlphaGo, for instance. This AI not only managed to defeat a human world champion in the complex game of Go, but did so by employing strategies never before conceived by humans [2]. In this sense, technology doesn’t merely supplement our intelligence; it pushes the boundaries of what we thought possible, stretching our cognitive horizons.

But the road to this digital utopia is not without its potholes. Cue the ‘dumber’ camp. Nicholas Carr, in his insightful book “The Shallows,” presents an argument that the Internet is altering our neural pathways, diminishing our capacity for deep reading, contemplation, and long-term memory retention [3]. The incessant hum of notifications, the relentless feed of new content, and the continuous lure of the ‘next big thing’ have created a digital environment where distraction is the norm, and concentration, an elusive goal.

The other concern within the ‘dumber’ camp is cognitive offloading. As we increasingly rely on external devices for information, we risk weakening our innate cognitive abilities [4]. Think about your last road trip. Did you rely on a GPS device or your sense of direction? With technology at our fingertips, turn-by-turn navigation is only a click away. But this convenience might be subtly eroding our spatial skills and sense of orientation.

When it comes to critical thinking and problem-solving, technology has the potential to both enhance and impair these skills. On one hand, digital tools provide platforms that are ripe for collaboration and problem-solving. Video games, for instance, have been found to improve problem-solving and strategic thinking [5].

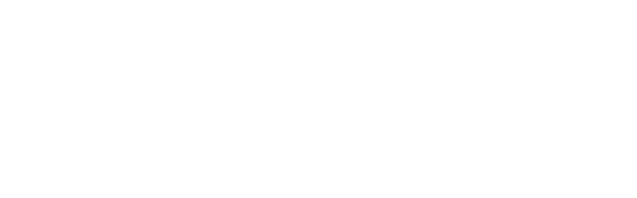

Yet the tide can turn when we consider the realm of social media. The often unverified and polarised content on these platforms can inhibit critical thinking and encourage cognitive biases. Eli Pariser’s concept of the ‘filter bubble’ encapsulates this: our digital algorithms are designed to show us more of what we like, trapping us in echo chambers that limit exposure to diverse perspectives and stifle critical thinking [6].

Given this dialectic, it’s clear that the human-technology relationship isn’t as simple as ‘smarter’ or ‘dumber.’ It’s a complex, ever-evolving dance where the steps are continually changing. Perhaps we’re not becoming more or less intelligent, but instead, we’re developing a new form of intelligence that thrives on the synergy between human cognition and machine capability.

Ultimately, I think we must do more than merely watch this transformation unfold. We should actively guide it, ensuring that our tech-driven future empowers us rather than undermines us. Our goal should not be to create a world where AI usurps us but one where it helps us realise our fullest potential.

In the end, the impact of technology and AI on our intelligence hinges on how we use these tools. As we move forward, it’s vital that we make conscious choices that optimise our intellectual growth and critical thinking abilities, even as we reap the benefits of the digital revolution.

Here’s to a future where we don’t just survive in the digital age but flourish, wielding technology as a tool to augment, not replace, our innate cognitive abilities.

References:

1. Bain, A. (2010). The democratizing power of the internet. The Educational Forum.

2. Silver, D., et al. (2016). Mastering the game of Go with deep neural networks and tree search. Nature.

3. Carr, N. (2011). The Shallows: What the Internet is Doing to Our Brains. WW Norton & Company.

4. Sparrow, B., Liu, J., & Wegner, D. M. (2011). Google effects on memory: Cognitive consequences of having information at our fingertips. Science.

5. Granic, I., Lobel, A., & Engels, R. C. (2014). The benefits of playing video games. American Psychologist.

6. Pariser, E. (2011). The Filter Bubble: What the Internet is Hiding from You. Penguin UK.